Participation guidelines

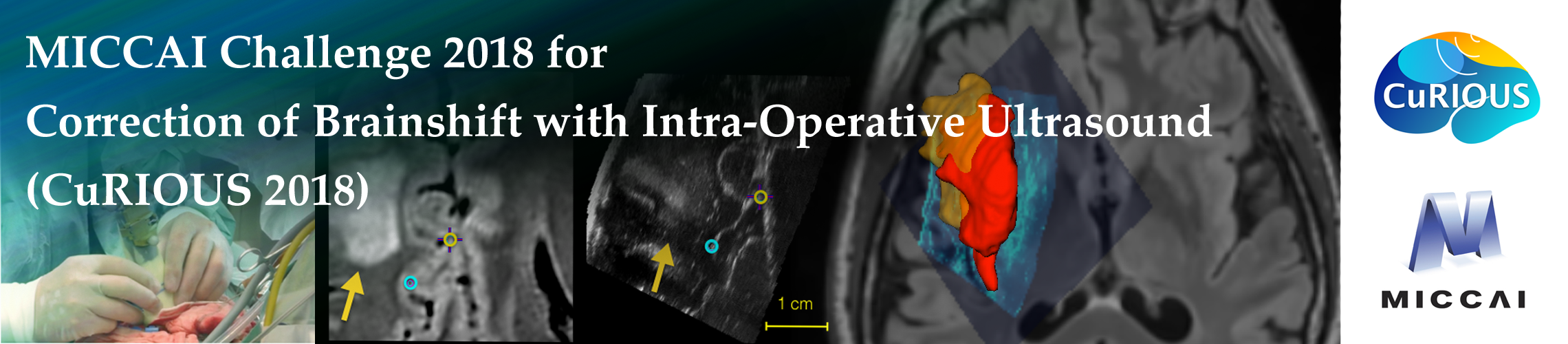

The first CuRIOUS 2018 Challenge will focus on brain shift correction prior to opening the dura, since the initial tissue deformation typically sets the tone for the remaining surgery. The challenge solicits robust and efficient MRI-iUS registration approaches to help correct brain shift.

A multi-modality clinical training dataset, with expert-labeled homologous anatomical landmarks, is already publicly available. A testing dataset will be distributed to the participants two weeks prior to the event, and the ranking based on this new dataset will be revealed during the challenge. During the event, the top teams will be invited to present their methods and results as oral presentations, and prizes will be awarded to the top 3 teams.

The CuRIOUS challenge requires all participating teams to submit a manuscript of 4 to 8 pages in LNCS format (the same format as the main MICCAI conference) that describes their algorithms and reports their results on the pre-released training dataset. All automatic and semi-automatic registration approaches are welcome. The submissions that describe the methodologies will be published in the MICCAI joint proceedings, and a summary report of the challenge will be released as a journal publication, including all participating teams as co-authors.

Only participants who submit their manuscripts will receive the test dataset for the final contest, and only those who submit the test data results and present their methods at the MICCAI workshop will be considered for the prizes and included in the follow-up publications. This means that at least one member of each participating team should register for the MICCAI Challenge.

Manuscript submission instructions

Please submit your manuscript by email to curious.challenge@gmail.com. In the body of the email, be sure to include the following:

- Manuscript title

- Corresponding author, affiliation, and email address

- Manuscript abstract

Please include your manuscript as a PDF document in the email, and make sure that the manuscript:

- contains a concise but sufficient description of your method

- fully demonstrates the results on the training dataset

- is in MICCAI (LNCS) format and is 4 to 8 pages long

- is free of grammatical errors

The review process is not double-blind.

Evaluation metrics

The registration methods will be evaluated using the expert-labeled anatomical landmarks of the challenge datasets.

For each case:

- The participants will submit the positions of the transformed MRI landmarks after registration.

- The mean target registration error (mTRE) will be computed between these landmarks and the ground-truth landmarks defined in the pre-resection iUS images.
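The per-case metric described above can be sketched as follows. This is a minimal illustration rather than the organizers' evaluation code: it assumes the mTRE is the mean Euclidean distance between paired 3D landmarks, and the function name `mean_tre` and all coordinates are hypothetical.

```python
import math

def mean_tre(transformed, ground_truth):
    """Mean Euclidean distance between paired 3D landmarks.

    The result is in the same units as the input coordinates (e.g. mm).
    Landmark i in `transformed` is assumed to correspond to landmark i
    in `ground_truth`.
    """
    if len(transformed) != len(ground_truth) or not transformed:
        raise ValueError("landmark lists must be non-empty and of equal length")
    distances = [math.dist(p, q) for p, q in zip(transformed, ground_truth)]
    return sum(distances) / len(distances)

# Hypothetical coordinates in mm, for illustration only.
warped_mri = [(10.0, 20.0, 30.0), (15.0, 25.0, 35.0)]
ius_truth = [(10.0, 20.0, 31.0), (15.0, 28.0, 39.0)]

# Individual errors are 1.0 mm and 5.0 mm, so the mean is 3.0 mm.
print(mean_tre(warped_mri, ius_truth))  # 3.0
```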

Participants will need to indicate whether their method is fully automatic or semi-automatic, and submit the resulting transformations along with a small script used to warp the MRI landmarks for the testing data.
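A landmark-warping script of the kind requested might look like the sketch below. The guidelines do not specify a transformation format, so this example assumes a 4x4 homogeneous affine matrix that maps MRI coordinates to iUS coordinates; deformable registrations would need a different representation. The function name `warp_landmarks` and the values are hypothetical.

```python
def warp_landmarks(matrix, landmarks):
    """Apply a 4x4 homogeneous affine matrix to a list of 3D points.

    `matrix` is a row-major nested list; `landmarks` is a list of
    (x, y, z) tuples. Assumption: the matrix maps MRI space to iUS space.
    """
    warped = []
    for x, y, z in landmarks:
        point = (x, y, z, 1.0)
        warped.append(tuple(
            sum(matrix[row][col] * point[col] for col in range(4))
            for row in range(3)
        ))
    return warped

# Hypothetical transform: a pure translation of (1, -2, 0.5) mm.
translation = [
    [1.0, 0.0, 0.0, 1.0],
    [0.0, 1.0, 0.0, -2.0],
    [0.0, 0.0, 1.0, 0.5],
    [0.0, 0.0, 0.0, 1.0],
]
mri_landmarks = [(10.0, 20.0, 30.0)]

print(warp_landmarks(translation, mri_landmarks))  # [(11.0, 18.0, 30.5)]
```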

Ranking

- For each case and each participant team: compute the mean TREs on the landmarks.

- For each case, rank the teams according to the mean TREs.

If two mTREs differ by less than 0.5 mm, the automatic method will be ranked higher.

If a case has not been uploaded or could not be processed for some reason, the team will be ranked last for this case.

- Compute the mean rank of each team, which gives the final ranking of all participating teams.
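The ranking procedure can be sketched as below. Several details are assumptions, since the guidelines leave them open: the 0.5 mm rule is applied in a single pass over adjacent teams in the sorted order, every team missing a case receives the last rank (the number of teams), and a lower mean rank is better. The names `rank_case` and `final_ranking` and all values are hypothetical.

```python
def rank_case(results, teams, tolerance=0.5):
    """Rank teams for one case.

    results: {team: mTRE in mm} for teams with a usable submission.
    teams:   {team: True if fully automatic, False if semi-automatic}.
    """
    ordered = sorted(results, key=results.get)
    # Single pass over adjacent pairs: a near-tie favours the automatic method.
    for i in range(len(ordered) - 1):
        a, b = ordered[i], ordered[i + 1]
        near_tie = abs(results[b] - results[a]) < tolerance
        if near_tie and teams[b] and not teams[a]:
            ordered[i], ordered[i + 1] = b, a
    ranks = {team: pos + 1 for pos, team in enumerate(ordered)}
    # Assumption: teams with no usable result all share the last rank.
    for team in teams:
        ranks.setdefault(team, len(teams))
    return ranks

def final_ranking(cases, teams):
    """Average the per-case ranks; a lower mean rank is better."""
    per_case = [rank_case(results, teams) for results in cases]
    mean_rank = {t: sum(r[t] for r in per_case) / len(per_case) for t in teams}
    return sorted(teams, key=mean_rank.get), mean_rank

# Hypothetical teams and mTREs (mm), for illustration only.
teams = {"A": True, "B": False, "C": True}
cases = [
    {"A": 2.0, "B": 1.8, "C": 3.5},  # A and B within 0.5 mm: automatic A ranks first
    {"A": 1.5, "B": 2.5},            # C did not submit this case: ranked last
]
order, mean_rank = final_ranking(cases, teams)
print(order)      # ['A', 'B', 'C']
print(mean_rank)  # {'A': 1.0, 'B': 2.0, 'C': 3.0}
```

With more than two teams inside the same 0.5 mm window, the pairwise rule is not transitive, so other readings of it could produce a different per-case order.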